Wearable Devices CUE1®

January 31, 2024

Taiwan

Association of Artificial Intelligence–Aided Chest Radiograph Interpretation with Reader Performance and Efficiency

This study aimed to evaluate whether a deep learning–based artificial intelligence (AI) engine, used concurrently during reading, can improve reader performance and efficiency in interpreting chest radiograph abnormalities.

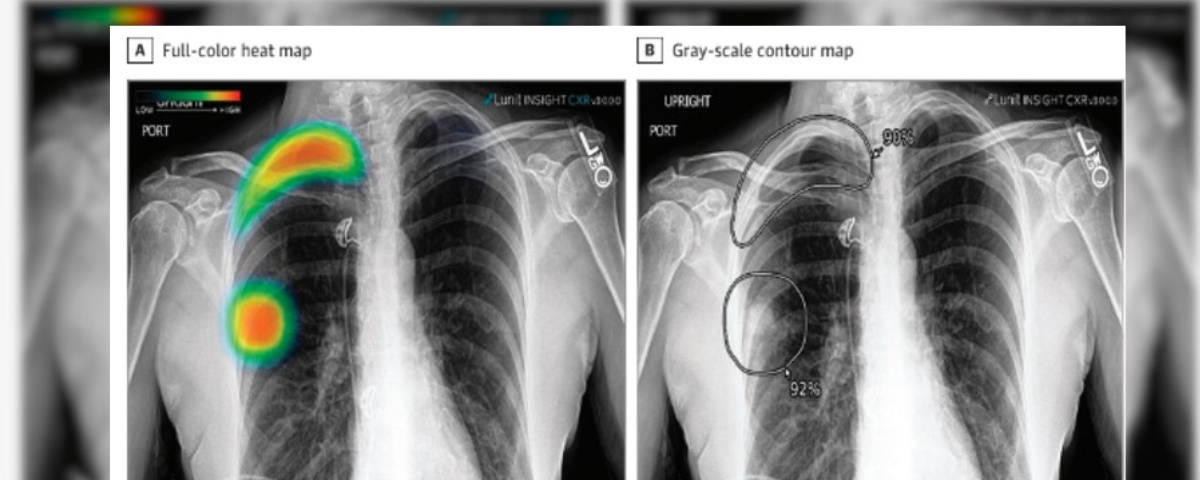

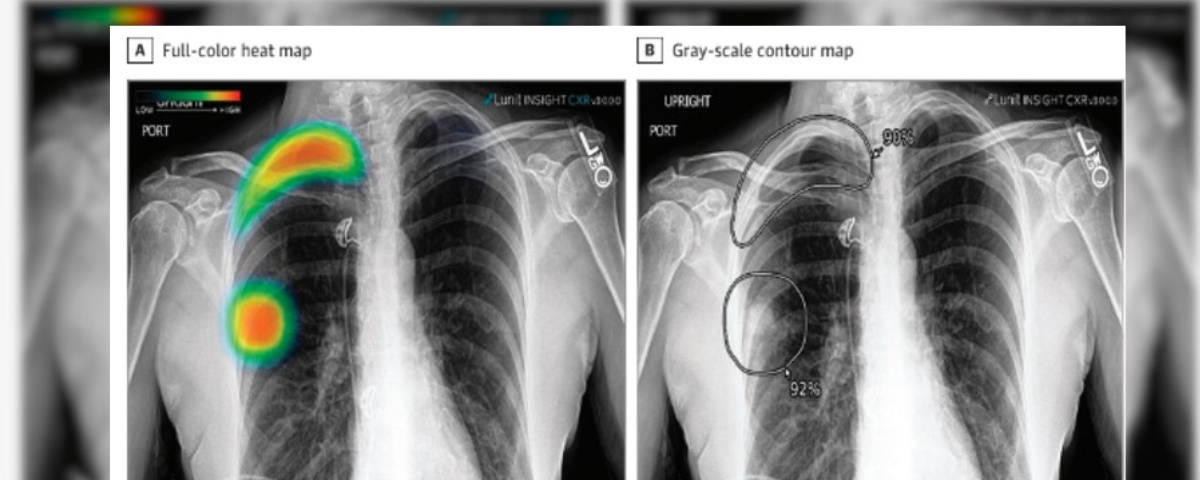

This multicenter cohort study was conducted from April to November 2021. Radiologists, including attending radiologists, thoracic radiology fellows, and residents, independently participated in 2 observer performance test sessions: one reading session with AI and one without AI, completed in randomized crossover order with a 4-week washout period in between. The AI produced a heat map and an image-level probability of the presence of a referable lesion. The data were collected at 2 quaternary academic hospitals in Boston, Massachusetts: Beth Israel Deaconess Medical Center (The Medical Information Mart for Intensive Care Chest X-Ray [MIMIC-CXR]) and Massachusetts General Hospital (MGH).
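The randomized crossover design can be illustrated with a small scheduling sketch. This is not the study's actual randomization procedure, which is not described here; it assumes hypothetical reader labels, a hypothetical start date, and a simple balanced scheme in which half the readers read with AI first and the other half read without AI first, with the second session following the 4-week washout.

```python
import random
from datetime import date, timedelta

# Hypothetical reader labels mirroring the 6 readers (2 attendings, 2 fellows, 2 residents).
READERS = [
    "attending_1", "attending_2",
    "fellow_1", "fellow_2",
    "resident_1", "resident_2",
]
WASHOUT = timedelta(weeks=4)  # washout period between the two sessions


def crossover_schedule(readers, first_session, seed=0):
    """Randomly assign half the readers to read with AI first and the rest to
    read without AI first; each reader's second session follows the washout."""
    rng = random.Random(seed)
    half = len(readers) // 2
    orders = ["AI first"] * half + ["AI second"] * (len(readers) - half)
    rng.shuffle(orders)  # random but balanced allocation of session order
    second_session = first_session + WASHOUT
    schedule = {}
    for reader, order in zip(readers, orders):
        if order == "AI first":
            with_ai, without_ai = first_session, second_session
        else:
            with_ai, without_ai = second_session, first_session
        schedule[reader] = {"order": order, "with_ai": with_ai, "without_ai": without_ai}
    return schedule


if __name__ == "__main__":
    # Hypothetical start date inside the April to November 2021 study window.
    for reader, plan in crossover_schedule(READERS, date(2021, 4, 5), seed=42).items():
        print(reader, plan["order"], "with AI:", plan["with_ai"], "without AI:", plan["without_ai"])
```

Balancing the order across readers means any learning effect from reading the same cases twice is spread evenly between the AI and non-AI conditions; a stricter variant could balance the order within each experience level.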

Ground-truth labels were established by consensus reading of 2 thoracic radiologists. Each reader documented their findings in a customized report template that recorded the 4 target chest radiograph findings and the reader's confidence in the presence of each finding. The time taken to report each chest radiograph was also recorded. Sensitivity, specificity, and area under the receiver operating characteristic curve (AUROC) were calculated for each target finding.
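The per-finding metrics can be sketched in a few lines. This minimal example assumes a hypothetical 0 to 100 confidence scale, a hypothetical positive-call threshold of 50, and made-up data; it computes point estimates only and does not reproduce the multireader statistical analysis used to obtain confidence intervals. AUROC is computed here as the probability that a randomly chosen positive case receives a higher confidence score than a randomly chosen negative case (ties count as one half).

```python
from typing import Sequence


def sensitivity_specificity(truth: Sequence[int], pred: Sequence[int]) -> tuple[float, float]:
    """Sensitivity = TP / (TP + FN); specificity = TN / (TN + FP)."""
    tp = sum(1 for t, p in zip(truth, pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(truth, pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(truth, pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(truth, pred) if t == 0 and p == 1)
    return tp / (tp + fn), tn / (tn + fp)


def auroc(truth: Sequence[int], scores: Sequence[float]) -> float:
    """Pairwise (Mann-Whitney) AUROC: share of positive/negative pairs in which
    the positive case scores higher, counting ties as 0.5."""
    pos = [s for t, s in zip(truth, scores) if t == 1]
    neg = [s for t, s in zip(truth, scores) if t == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    # Hypothetical ground truth (1 = finding present) and reader confidence (0 to 100).
    truth = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
    confidence = [90, 70, 40, 20, 60, 10, 5, 30, 0, 15]
    calls = [1 if c >= 50 else 0 for c in confidence]  # hypothetical threshold of 50
    sens, spec = sensitivity_specificity(truth, calls)
    print(f"sensitivity={sens:.3f} specificity={spec:.3f} AUROC={auroc(truth, confidence):.3f}")
    # → sensitivity=0.500 specificity=0.833 AUROC=0.875
```

Sensitivity and specificity depend on the chosen threshold, whereas AUROC summarizes the reader's confidence ranking across all thresholds, which is why the template recorded confidence and not only a yes/no call.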

A total of 6 radiologists (2 attending radiologists, 2 thoracic radiology fellows, and 2 residents) participated in the study. The study involved a total of 497 frontal chest radiographs from adult patients with and without the 4 target findings (pneumonia, nodule, pneumothorax, and pleural effusion): 247 from the MIMIC-CXR data set (demographic data for patients were not available) and 250 from MGH (mean [SD] age, 63 [16] years; 133 men [53.2%]). The target findings were present in 351 of the 497 chest radiographs. Compared with the readers, the AI had higher sensitivity for nodule, pneumonia, and pneumothorax and slightly lower sensitivity for pleural effusion (nodule, 0.816 [95% CI, 0.732-0.882] vs 0.567 [95% CI, 0.524-0.611]; pneumonia, 0.887 [95% CI, 0.834-0.928] vs 0.673 [95% CI, 0.632-0.714]; pleural effusion, 0.872 [95% CI, 0.808-0.921] vs 0.889 [95% CI, 0.862-0.917]; pneumothorax, 0.988 [95% CI, 0.932-1.000] vs 0.792 [95% CI, 0.756-0.827]). AI-aided interpretation was associated with significantly improved reader sensitivities for all target findings without negative impacts on specificity. Overall, the readers' AUROCs improved for all 4 target findings, with significant improvements in the detection of pneumothorax and nodule. The reporting time with AI was 10% lower than without AI (36.9 vs 40.8 seconds; difference, 3.9 seconds; 95% CI, 2.9-5.2 seconds; P < .001).
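The derived figures in the results can be checked with simple arithmetic. This sketch uses only the numbers reported above; the helper name `relative_reduction` is illustrative.

```python
def relative_reduction(before: float, after: float) -> float:
    """Fractional reduction from `before` to `after`."""
    return (before - after) / before


if __name__ == "__main__":
    without_ai, with_ai = 40.8, 36.9  # reported reporting times in seconds
    diff = without_ai - with_ai
    print(f"difference: {diff:.1f} s, reduction: {relative_reduction(without_ai, with_ai):.1%}")
    # → difference: 3.9 s, reduction: 9.6%
    print(f"radiographs with a target finding: {351 / 497:.1%}")  # → 70.6%
    print(f"men in the MGH set: {133 / 250:.1%}")  # → 53.2%
```

A 3.9-second saving on a 40.8-second baseline is about 9.6%, which rounds to the 10% reduction stated in the results.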

These findings suggest that AI-aided interpretation was associated with improved reader performance and efficiency in identifying significant thoracic findings on a chest radiograph.

A chest radiograph is a diagnostic imaging tool used to detect pathologies involving the lungs, airways, heart, pleura, chest wall, and vessels. According to the World Health Organization, chest X-rays account for 40% of the 3.6 billion radiographic examinations performed worldwide. This study evaluated whether Lunit INSIGHT CXR, an artificial intelligence (AI) algorithm, could outperform radiologists and help them read chest radiographs more accurately and efficiently. Although the study covered only 4 target findings, the AI algorithm alone showed higher sensitivity than the radiologists for 3 of the 4 findings, and the radiologists' sensitivity improved for all 4 findings when they read with AI assistance. This suggests that our patients could benefit if the software were extended to detect more pathologies, such as those affecting the heart and pleura. Such a tool could also be cost-effective and shorten the time patients spend waiting for radiology reports.