FDA Cleared Imbio AI Programs for Scanning Lung Diseases; Neuromuscular Tongue Stimulator for Snoring & Vitls Platform for Remote Patient Monitoring

December 1, 2022

1977-1981

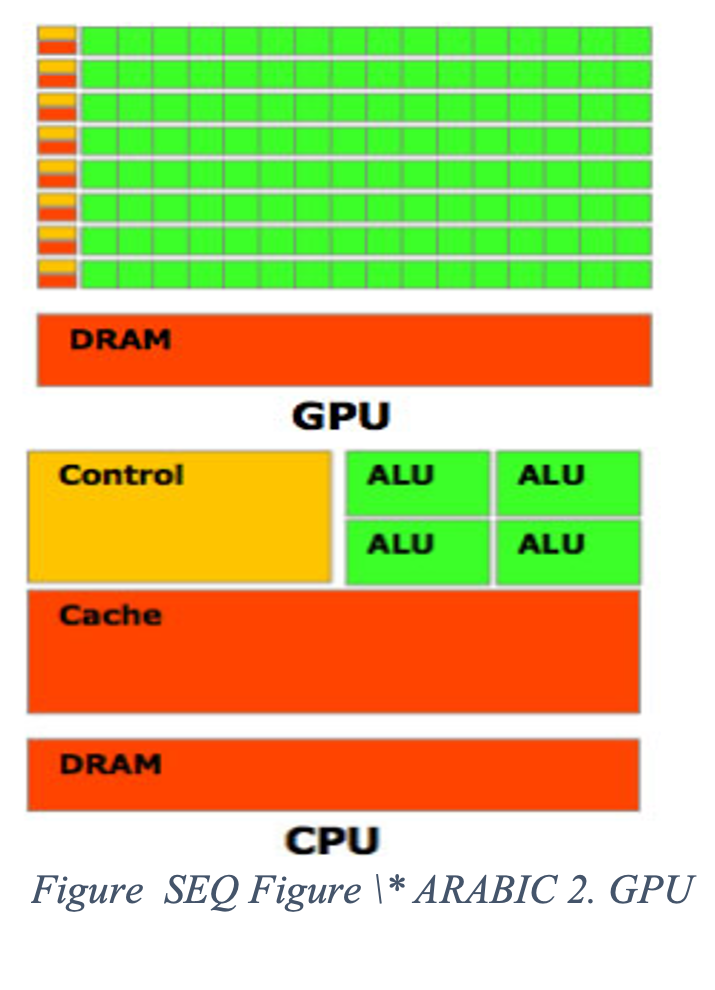

January 1, 2023

Physical Requirements of Artificial Intelligence

Research in AI is currently focused on creating energy-efficient Machine Learning systems that do not sacrifice much accuracy due to memory constraints...