Physical Requirements of Artificial Intelligence

January 1, 2023

Machine Learning (ML) is an active area of research whose applications depend on both hardware design and software development. Designing suitable hardware for ML software means weighing accuracy, energy, throughput, and cost requirements.(1) Data movement has been central to the development of AI, but it is also extremely energy-demanding. Balancing the need for data movement against accuracy, throughput, and cost has motivated several alternatives in software architecture and memory hardware.(1)

Accuracy in an ML model depends on identifying patterns and relationships between variables in a given dataset, so a larger dataset generally offers a better chance of high accuracy. Programmability is also an important factor, since the weights need to change when the environment changes. Together, programmability and high dimensionality require immense amounts of data creation, movement, and computation.(1)

Many ML designs use memory systems that combine smaller on-chip memories with larger off-chip memories to store the intermediate activations and weight parameters. Values held on-chip can then be reused, avoiding accesses to slow off-chip memory, which cost a large amount of energy.(2)
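
The benefit of on-chip reuse can be illustrated with a toy access counter. This is a minimal sketch under stated assumptions, not a model from the cited work: the function name `off_chip_reads`, the two-level memory, and the rule that each weight tile is reused for every input sample before the next tile is fetched are all hypothetical.

```python
import math


def off_chip_reads(num_weights, num_samples, buffer_size):
    """Return (weight_reads, sample_reads) from off-chip memory.

    Hypothetical model: every input sample needs every weight.
    With an on-chip buffer, weights are fetched in tiles of
    `buffer_size`, and each tile is reused for all samples.
    """
    if buffer_size <= 0:
        # No on-chip storage: each sample is read once, but every
        # weight is re-read from off-chip memory for every sample.
        return num_weights * num_samples, num_samples
    tiles = math.ceil(num_weights / buffer_size)
    # Each weight is fetched once; samples are re-streamed per tile.
    return num_weights, tiles * num_samples


no_buffer = off_chip_reads(1000, 64, buffer_size=0)
with_buffer = off_chip_reads(1000, 64, buffer_size=128)
print("without buffer:", no_buffer)
print("with buffer:   ", with_buffer)
```

In this toy setting, the buffer replaces repeated weight fetches with a much smaller number of repeated sample fetches. Real designs choose which values to keep on-chip based on the actual layer shapes and memory costs.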

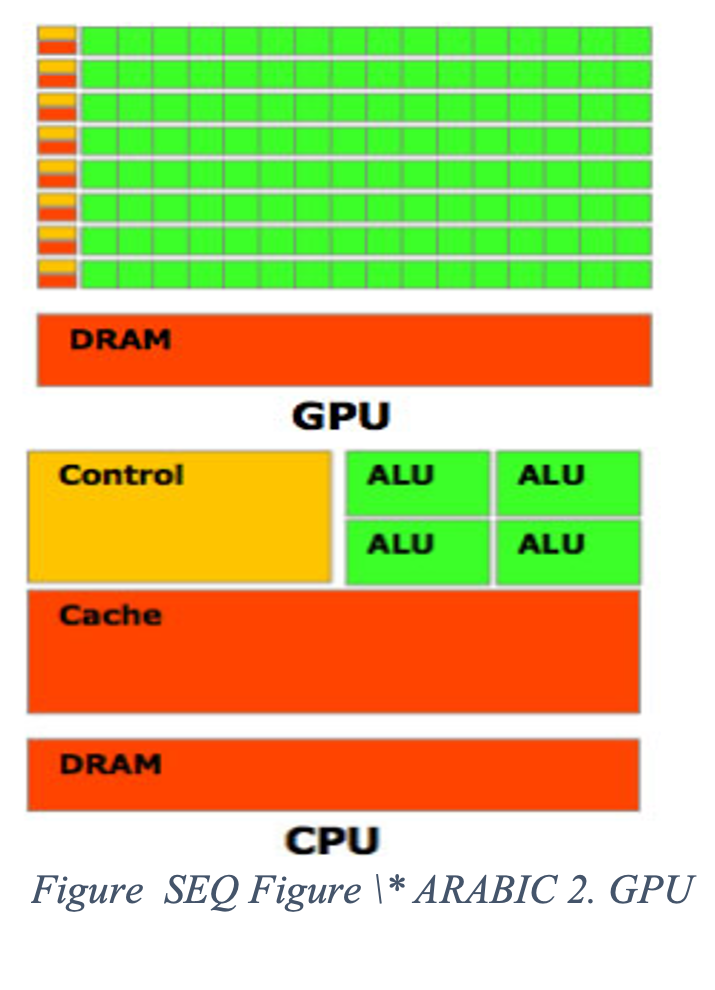

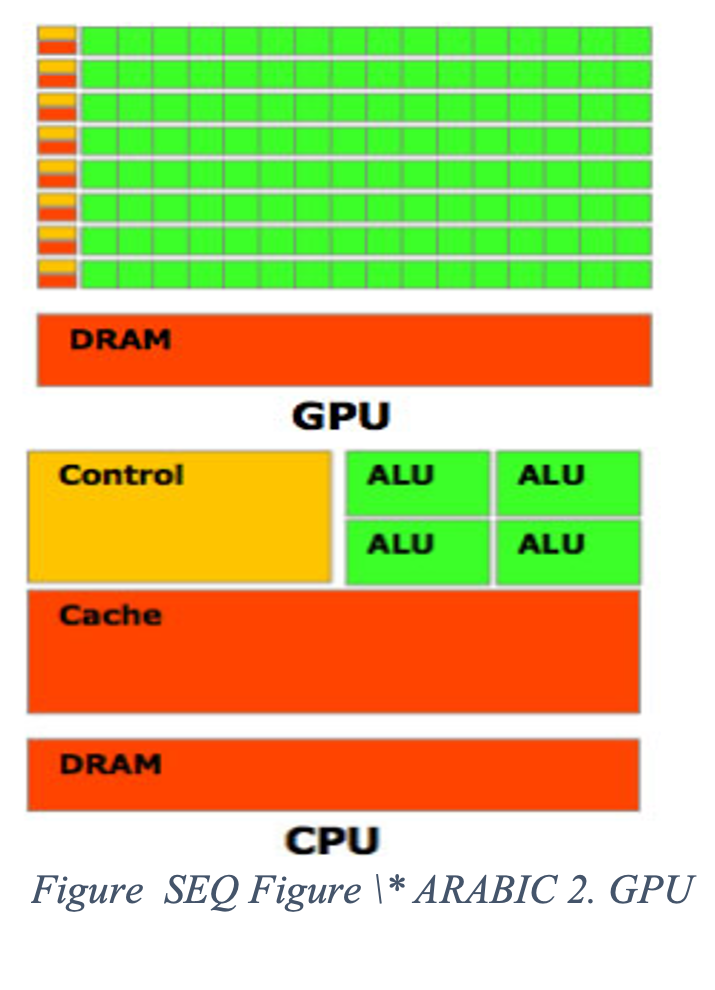

To address the growing cost of data movement, several methods have been researched, including rethinking Central Processing Unit (CPU) and Graphics Processing Unit (GPU) platforms and using accelerators. In addition, some joint algorithm-hardware designs sacrifice some precision and exploit sparsity and compression.(1)

Central Processing Units (CPUs) and Graphics Processing Units (GPUs) use Single Instruction, Multiple Data (SIMD) or Single Instruction, Multiple Threads (SIMT) execution to perform multiply-accumulate (MAC) operations in parallel. On these platforms, classification is expressed as a matrix multiplication. The multiplication is mapped onto the storage hierarchy of the CPU or GPU, and it can be sped up further by applying transforms that reduce the number of multiplications.(1)
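
To make the link between MACs and matrix multiplication concrete, the following sketch multiplies a small weight matrix by an input vector and counts the MAC operations. The function name `matmul_with_mac_count` and the example values are illustrative assumptions. The loop runs sequentially here; SIMD and SIMT hardware would run many of these independent accumulations in parallel.

```python
def matmul_with_mac_count(a, b):
    """Multiply matrices a (rows x inner) and b (inner x cols).

    Returns the product and the number of multiply-accumulate
    (MAC) operations performed.
    """
    rows, inner, cols = len(a), len(b), len(b[0])
    out = [[0.0] * cols for _ in range(rows)]
    macs = 0
    for i in range(rows):
        for j in range(cols):
            acc = 0.0
            for k in range(inner):
                acc += a[i][k] * b[k][j]  # one MAC
                macs += 1
            out[i][j] = acc  # each (i, j) is independent of the others
    return out, macs


# Hypothetical layer: 3 outputs, 2 inputs, one input column.
weights = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
inputs = [[1.0], [0.5]]
result, macs = matmul_with_mac_count(weights, inputs)
print(result, macs)
```

The MAC count equals rows x inner x cols, which is why transforms that reduce the number of multiplications translate directly into less work.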

Accelerators allow data movement to be optimized; their main goal is to reduce accesses to the most expensive levels of the memory hierarchy, primarily by promoting data reuse.(1)

CPUs and GPUs usually operate on 32- or 64-bit number representations, a precision that must be retained for training. During inference, however, fixed-point representations can considerably lower the bit width, saving area and energy.(1) Some hand-crafted approaches have reduced the bit width to 16 bits without a drastic impact on accuracy.(3)
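
As a rough illustration of fixed-point inference, the sketch below converts weights to 16-bit signed fixed-point values and back. The split of 8 fractional bits, the helper names `to_fixed_point` and `from_fixed_point`, and the sample weights are assumptions chosen for the example; they are not the scheme used in the cited work.

```python
def to_fixed_point(value, frac_bits=8, total_bits=16):
    """Quantize a float to a signed fixed-point integer.

    Assumed format: `total_bits` wide with `frac_bits` fractional
    bits. Values outside the representable range saturate.
    """
    scale = 1 << frac_bits
    lowest = -(1 << (total_bits - 1))
    highest = (1 << (total_bits - 1)) - 1
    quantized = round(value * scale)
    return max(lowest, min(highest, quantized))


def from_fixed_point(quantized, frac_bits=8):
    """Convert a fixed-point integer back to a float."""
    return quantized / (1 << frac_bits)


weights = [0.7312, -1.2046, 0.0039, 3.1416]  # illustrative values
quantized = [to_fixed_point(w) for w in weights]
restored = [from_fixed_point(q) for q in quantized]
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(quantized, max_error)
```

For values inside the representable range, the rounding error is at most half of one step, here 1/512. Whether that error is acceptable depends on the model and has to be checked against accuracy.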

For Support Vector Machine (SVM) classification, the goal is to obtain sparse weights, which has been reported to cut the number of multiplications by 2x. Pre-processing the image inputs has likewise been reported to reduce energy consumption by 24%.(1)
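
The reason sparse weights save multiplications can be sketched with a dot product that skips zero weights. The function name `sparse_dot` and the vectors are made up for illustration, and the sketch does not reproduce the 2x or 24% figures above.

```python
def sparse_dot(weights, features):
    """Dot product that skips zero weights.

    Returns the score and the number of multiplications performed.
    """
    total = 0.0
    multiplications = 0
    for w, x in zip(weights, features):
        if w == 0.0:
            continue  # a zero weight contributes nothing to the sum
        total += w * x
        multiplications += 1
    return total, multiplications


# Illustrative sparse weight vector: 3 of 8 entries are nonzero.
weights = [0.0, 1.5, 0.0, -2.0, 0.0, 0.0, 0.5, 0.0]
features = [3.0, 2.0, 1.0, 1.0, 4.0, 2.0, 2.0, 5.0]
score, mults = sparse_dot(weights, features)
print(score, mults)
```

Hardware that exploits sparsity applies the same idea by avoiding both the multiplication and the memory read for zero-valued entries.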

For Deep Neural Networks (DNNs), a process called pruning (removing small weights that have minimal impact on the output) can be guided by energy consumption, thus increasing speed and decreasing energy expenditure.(1)
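
A minimal sketch of pruning follows. It uses a plain magnitude threshold as a simpler stand-in for the energy-guided selection described above; the function names, the threshold of 0.05, and the weight values are assumptions for the example.

```python
def prune_by_magnitude(weights, threshold):
    """Set weights whose absolute value is below `threshold` to zero."""
    return [w if abs(w) >= threshold else 0.0 for w in weights]


def sparsity(weights):
    """Fraction of weights that are exactly zero."""
    return sum(1 for w in weights if w == 0.0) / len(weights)


weights = [0.8, -0.02, 0.01, -0.6, 0.03, 0.4]  # illustrative values
pruned = prune_by_magnitude(weights, threshold=0.05)
print(pruned, sparsity(pruned))
```

In practice, pruning is usually followed by retraining the remaining weights so that accuracy recovers; that step is omitted here.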

When most units or weights are off most of the time, the hardware becomes event-driven, and only the activated units consume resources.(4)

Compression can be used to minimize data movement and storage costs. Lossless compression can reduce data transfer on and off chip. Lossy compression can also be applied to weights and feature vectors so that they can be stored on-chip at reduced cost.(1)
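
The lossless case can be sketched with Python's standard `zlib` and `struct` modules. The weight vector below is invented for illustration (mostly zeros, as might result from pruning), and general-purpose DEFLATE is only an assumed stand-in for whatever scheme a hardware design would use.

```python
import struct
import zlib


def pack_weights(weights):
    """Serialize weights as little-endian 32-bit floats."""
    return struct.pack(f"<{len(weights)}f", *weights)


def unpack_weights(data):
    """Inverse of pack_weights."""
    return list(struct.unpack(f"<{len(data) // 4}f", data))


# Illustrative weights: mostly zeros plus a few repeated values that
# are exactly representable as 32-bit floats.
weights = [0.0] * 900 + [0.5, -0.25, 0.125, 1.0] * 25
raw = pack_weights(weights)
compressed = zlib.compress(raw)
restored = unpack_weights(zlib.decompress(compressed))

print(len(raw), len(compressed))
print(restored == weights)  # lossless: every value is recovered
```

Fewer bytes to transfer means fewer off-chip accesses, at the price of extra work to compress and decompress. A lossy scheme would shrink the data further but would not recover the original values exactly.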

In the big data era, more data has been created in the last five years than at any other time in history. That is why using data more efficiently, while saving energy and space, has become important.(1) The future may need differentiable programming that is simultaneously interactive, flexible, efficient, and dynamic.(4)

References